TV news is an important source of information for most people. It allows a better understanding of the social and political events that punctuate our everyday life. Today, large amounts of digital news video can be stored thanks to the availability of low-cost mass storage technology. As video archives grow rapidly and manual annotation becomes impractical, the need for efficient indexing and retrieval systems is evident. Text displayed in news video is one of the most important sources of high-level information about video content, and it can serve as a powerful semantic clue for automatic broadcast annotation. Nevertheless, extracting text from videos is a non-trivial task because of challenges such as the complexity of backgrounds and the variability of text regions in scale, font, color and position. Over the past two decades, interest in this area of research has led to a plethora of text detection and recognition methods. So far, these methods have focused on only a few scripts, such as Latin and Chinese. For a language like Arabic, which is used by more than one billion people around the world, the literature is limited to very few studies.

This thesis aims to contribute to the current research in the field of Video Optical Character Recognition (OCR) by developing novel approaches that automatically detect and recognize embedded Arabic text in news videos.

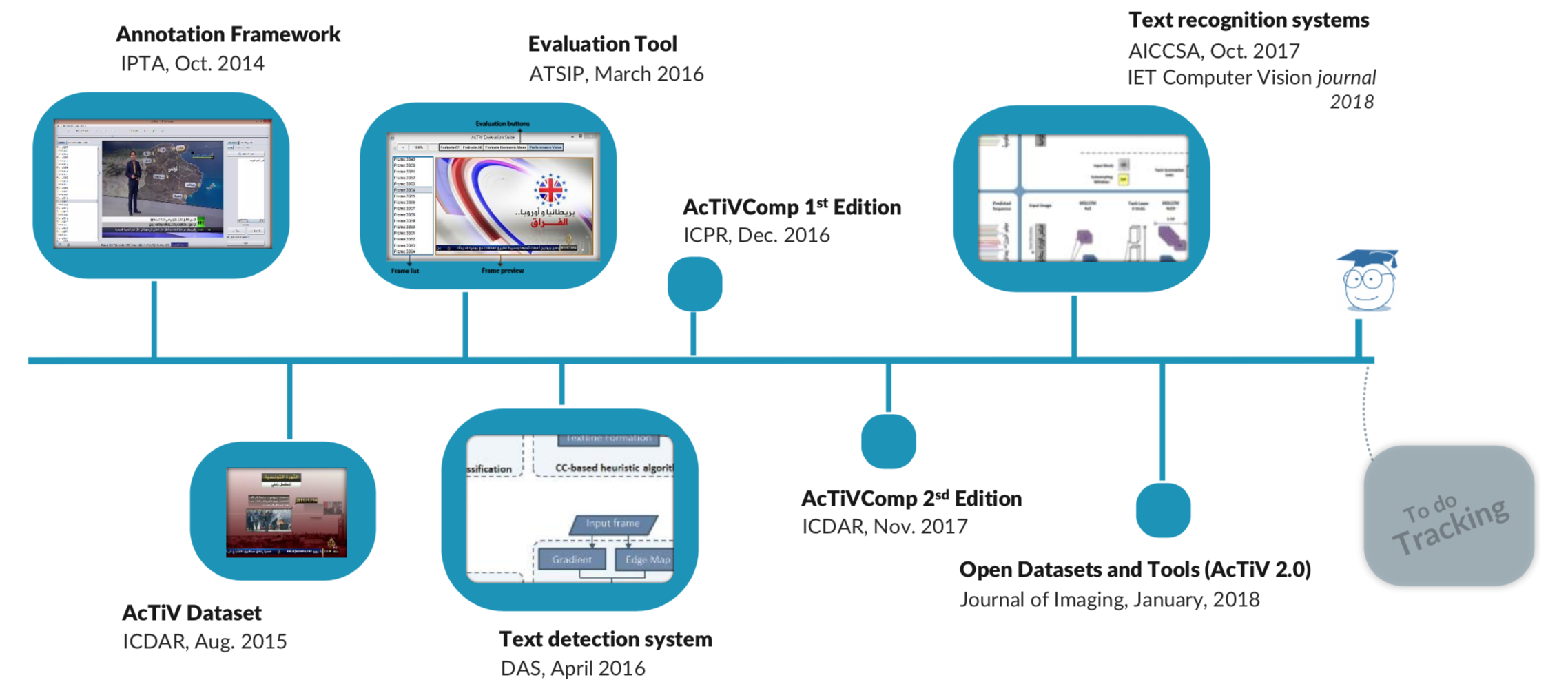

We introduce a two-stage method for Arabic text detection in video frames. The first stage is connected-component (CC) based: text candidates are extracted with the Stroke Width Transform (SWT) algorithm, filtered with a set of heuristic rules, and grouped with a proposed text-line formation technique. The second stage is a machine-learning verification step that uses Convolutional Auto-Encoders (CAE) and Support Vector Machines (SVM) for text/non-text classification.
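As a rough illustration of the filtering and grouping steps of a CC-based stage, the sketch below discards components using simple geometric and stroke-width rules and then groups the survivors into lines by vertical overlap. It is a minimal sketch under stated assumptions, not the method of this thesis: the `Component` type, the function names, and every threshold are hypothetical, and the stroke widths are assumed to have been computed beforehand by an SWT implementation.

```python
from dataclasses import dataclass
from statistics import mean, pstdev
from typing import List


@dataclass
class Component:
    """Hypothetical connected component: bounding box plus per-pixel stroke widths."""
    x: int
    y: int
    w: int
    h: int
    stroke_widths: List[float]


def is_text_candidate(c, max_sw_cv=0.5, min_h=8, max_aspect=10.0):
    """Keep components that are tall enough, not too elongated, and whose
    stroke width is roughly constant. All thresholds are illustrative assumptions."""
    if c.h < min_h or c.w / c.h > max_aspect:
        return False
    m = mean(c.stroke_widths)
    return m > 0 and pstdev(c.stroke_widths) / m <= max_sw_cv


def group_into_lines(comps, min_overlap=0.5):
    """Greedily group components whose vertical extents overlap sufficiently."""
    lines = []
    for c in sorted(comps, key=lambda k: k.y):
        for line in lines:
            top = min(k.y for k in line)
            bottom = max(k.y + k.h for k in line)
            overlap = min(bottom, c.y + c.h) - max(top, c.y)
            if overlap >= min_overlap * min(c.h, bottom - top):
                line.append(c)
                break
        else:
            lines.append([c])
    # Arabic is written right to left, so order each line by decreasing x.
    return [sorted(line, key=lambda k: -k.x) for line in lines]


candidates = [
    Component(10, 10, 20, 20, [3, 3, 4, 3]),
    Component(40, 12, 15, 18, [3, 4, 3]),
    Component(0, 100, 200, 5, [1, 9, 2]),   # too flat: rejected by the rules
    Component(5, 60, 30, 20, [2, 2, 2]),
]
kept = [c for c in candidates if is_text_candidate(c)]
text_lines = group_into_lines(kept)
```

In a full pipeline, each resulting line would then be passed to the verification stage for text/non-text classification.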

For text recognition, we adopt a segmentation-free methodology using Multidimensional Recurrent Neural Networks (MDRNN) coupled with a Connectionist Temporal Classification (CTC) decoding layer. The system also includes a new preprocessing step and a compact representation of character models. In this thesis, we aim to depart from the dominant methodology, which relies on hand-crafted features, by using deep learning methods, namely CAEs and MDRNNs, to learn features automatically.
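To make the role of a CTC output layer concrete, the following sketch shows greedy (best-path) decoding: take the most likely label at each time step, collapse consecutive repeats, then drop the blank label. This is one common decoding strategy and is given only as an assumption-laden illustration; the function name, the toy alphabet, and the choice of index 0 as the blank are hypothetical and are not taken from the thesis.

```python
def ctc_greedy_decode(probs, alphabet, blank=0):
    """Best-path CTC decoding.

    probs    : list of per-frame score lists, one score per label
    alphabet : label strings, with alphabet[blank] reserved for the CTC blank
    """
    # Most likely label index at each time step.
    best = [max(range(len(frame)), key=frame.__getitem__) for frame in probs]
    decoded = []
    prev = None
    for k in best:
        # Emit a label only when it differs from the previous frame and is not blank.
        if k != prev and k != blank:
            decoded.append(alphabet[k])
        prev = k
    return "".join(decoded)


# Toy example with a three-label alphabet (blank, "a", "b").
toy_alphabet = ["-", "a", "b"]
toy_probs = [
    [0.1, 0.8, 0.1],  # a
    [0.2, 0.7, 0.1],  # a (repeat, collapsed)
    [0.9, 0.05, 0.05],  # blank separates the two a's
    [0.1, 0.8, 0.1],  # a
    [0.1, 0.1, 0.8],  # b
    [0.1, 0.2, 0.7],  # b (repeat, collapsed)
    [0.8, 0.1, 0.1],  # blank
]
decoded_text = ctc_greedy_decode(toy_probs, toy_alphabet)
```

Because no explicit character boundaries are required, this kind of output layer is what allows recognition to remain segmentation-free.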

At the outset of this work, no publicly available dataset existed for artificially embedded text in Arabic news videos. Creating one was therefore a necessity. The proposed dataset, named AcTiV, contains 189 video clips recorded from a DBS system, which serve as raw material for creating 4,063 text frames for detection tasks and 10,415 cropped text-line images for recognition purposes. AcTiV is freely available to the scientific community. It is worth noting that the dataset was used as a benchmark for two international competitions held in conjunction with the ICPR 2016 and ICDAR 2017 conferences, respectively.